Legacy Flow - dump

Dump

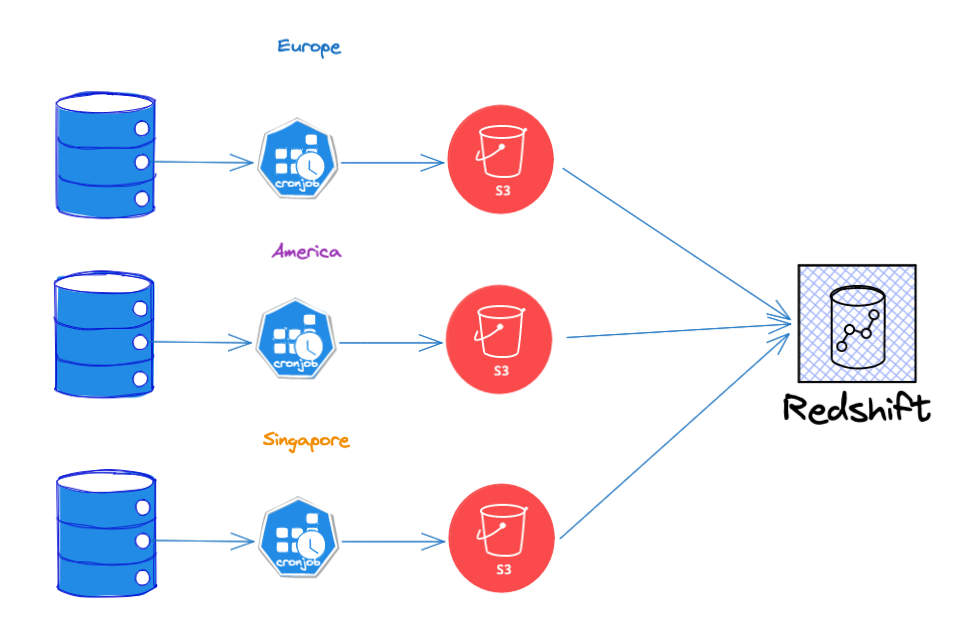

The legacy way to export favorite sport data to the datalake uses an SQL dump.

The data are dumped into an S3 bucket named prd-dct-wksp-uds-crm-sps, under the path inputs/{TIMESTAMP}/{FILENAME}_{ZONE}-{TIMESTAMP}.dmp.
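The object layout above can be expressed as a small key builder. This is a minimal sketch: the bucket name and path template come from this page, but the TIMESTAMP format (`%Y%m%d%H%M%S`), the example FILENAME `favorite_sports` and the ZONE value `eu` are hypothetical, since the real values are not documented here.

```python
from datetime import datetime

BUCKET = "prd-dct-wksp-uds-crm-sps"
# Assumption: the real TIMESTAMP format is not documented; this one is hypothetical.
TIMESTAMP_FORMAT = "%Y%m%d%H%M%S"


def dump_key(filename: str, zone: str, when: datetime) -> str:
    """Build the object key inputs/{TIMESTAMP}/{FILENAME}_{ZONE}-{TIMESTAMP}.dmp."""
    ts = when.strftime(TIMESTAMP_FORMAT)
    return f"inputs/{ts}/{filename}_{zone}-{ts}.dmp"


def dump_uri(filename: str, zone: str, when: datetime) -> str:
    """Full s3:// URI of a dump object in the bucket."""
    return f"s3://{BUCKET}/{dump_key(filename, zone, when)}"


if __name__ == "__main__":
    # Hypothetical FILENAME and ZONE values, for illustration only.
    print(dump_key("favorite_sports", "eu", datetime(2024, 1, 15, 2, 0, 0)))
    # prints "inputs/20240115020000/favorite_sports_eu-20240115020000.dmp"
```

The same TIMESTAMP appears twice in the key, so the directory name alone is enough to find every file produced by one run.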

The dump is done every day and contains all the data stored by the application.

This is done by a dedicated K8S cron job provided by the Member Infra team. The Sport team is responsible for writing the dump SQL request and granting access to the S3 buckets.

The ingestion of the data into datalake tables is done by the UserAnalytics team. This process builds two tables:

- The current table, with the current favorite sports of members

- The history table, with the full history of members' favorite sports, including deletions for instance
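Because each dump is a full snapshot, deletions never appear as explicit rows: a favorite sport simply disappears from the next dump. One plausible way to feed a history table is therefore to diff two consecutive snapshots. The sketch below assumes a hypothetical row shape `(member_id, sport_id)` and hypothetical event labels `added`/`deleted`; it illustrates the idea and is not necessarily how the UserAnalytics ingestion works.

```python
from typing import Iterable, List, NamedTuple, Tuple


class Favorite(NamedTuple):
    # Hypothetical row shape: the real dump columns are not documented here.
    member_id: str
    sport_id: str


def diff_snapshots(
    previous: Iterable[Favorite], current: Iterable[Favorite]
) -> List[Tuple[str, Favorite]]:
    """Return history events between two full daily dumps.

    Rows only in `current` are additions; rows only in `previous` are deletions.
    Rows present in both snapshots produce no event.
    """
    prev_set, curr_set = set(previous), set(current)
    events = [("added", row) for row in sorted(curr_set - prev_set)]
    events += [("deleted", row) for row in sorted(prev_set - curr_set)]
    return events


if __name__ == "__main__":
    yesterday = [Favorite("m1", "tennis"), Favorite("m1", "golf")]
    today = [Favorite("m1", "tennis"), Favorite("m2", "ski")]
    for event in diff_snapshots(yesterday, today):
        print(event)
```

In this model the current table is just the latest snapshot, while the history table accumulates the events produced by each daily diff.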

Technical deployment

The job is deployed in the Europe, America and Singapore zones. These deployments are described in the common Flux repositories:

- prod-gke-eu -> member-infra/dumps-script-pr/dumps-sp.yaml

- prod-gke-us -> member-infra/dumps-script-pr/dumps-sp.yaml

- prod-gke-sg -> member-infra/dumps-script-pr/dumps-sp.yaml

Monitoring

Dump monitoring is based on logs and alerts.

An alert is raised when no dump has occurred during the past day.
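The alerting rule itself is log-based. As a complementary illustration of the same "no dump in the past day" condition, the sketch below checks object keys instead of logs. It assumes the same hypothetical `%Y%m%d%H%M%S` TIMESTAMP format, interpreted as UTC, and takes the keys as a plain list; in practice they could come from an S3 listing such as boto3's `list_objects_v2` with `Prefix="inputs/"`.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional

# Assumption: hypothetical TIMESTAMP format, interpreted as UTC.
TIMESTAMP_FORMAT = "%Y%m%d%H%M%S"


def latest_dump_time(keys: Iterable[str]) -> Optional[datetime]:
    """Return the newest TIMESTAMP found in keys shaped like inputs/{TIMESTAMP}/..."""
    times = []
    for key in keys:
        parts = key.split("/")
        if len(parts) >= 3 and parts[0] == "inputs":
            try:
                ts = datetime.strptime(parts[1], TIMESTAMP_FORMAT)
            except ValueError:
                continue  # ignore objects that do not follow the naming convention
            times.append(ts.replace(tzinfo=timezone.utc))
    return max(times, default=None)


def should_alert(
    keys: Iterable[str], now: datetime, max_age: timedelta = timedelta(days=1)
) -> bool:
    """True when no dump was produced within max_age before `now` (timezone-aware)."""
    latest = latest_dump_time(keys)
    return latest is None or now - latest > max_age


if __name__ == "__main__":
    keys = ["inputs/20240115020000/favorite_sports_eu-20240115020000.dmp"]
    print(should_alert(keys, datetime(2024, 1, 15, 12, tzinfo=timezone.utc)))
    print(should_alert(keys, datetime(2024, 1, 16, 3, tzinfo=timezone.utc)))
```

An empty listing also triggers the alert, which matches the intent of catching a dump that never ran.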

Schema